Key points

- Digital-Ready Materials Will Become a New Commodity Class: As digital twins scale, fabrics will be judged not just by drape or durability but by scan fidelity, topology cleanliness, and simulation performance.

- VFX Pipelines Are Quietly Rewiring Fashion: Creature-animation workflows - particles, hair systems, volumetric rigs - are now the backbone of next-gen fabric simulation, outperforming traditional fashion tools for complex textiles.

- Volumetric Capture Is the New Loom: Motion, tension, crease patterns, and body-specific garment behavior are increasingly derived from volumetric and mocap data, not static CAD assumptions.

- AI Becomes the Materials Scientist of Digital Fashion: Machine-learning models trained on multi-scan datasets will predict fabric behavior, automate material ratings, and generate adaptive textiles optimized for real-time engines.

- Immersive Materials Break Physics and Business Models: Digital textiles can shapeshift, react, and evolve in real time, enabling reactive environments and modular garments that unlock new interaction models, new aesthetics, and entirely new sourcing ecosystems.

Full interview with Costas Kazantzis

In your recent work with volumetric capture and real-time rendering, how do you select physical materials that will translate effectively into interactive digital formats?

In Reskinning Reality, a large part of the process is about identifying and refining pipelines that allow us to reconstruct any type of fabric in 3D while overcoming the complexities of translating physical materiality into digital form. Rather than treating digital garments as simplified representations, I’m interested in how we can retain and even enhance their tactile and behavioral qualities within real-time environments.

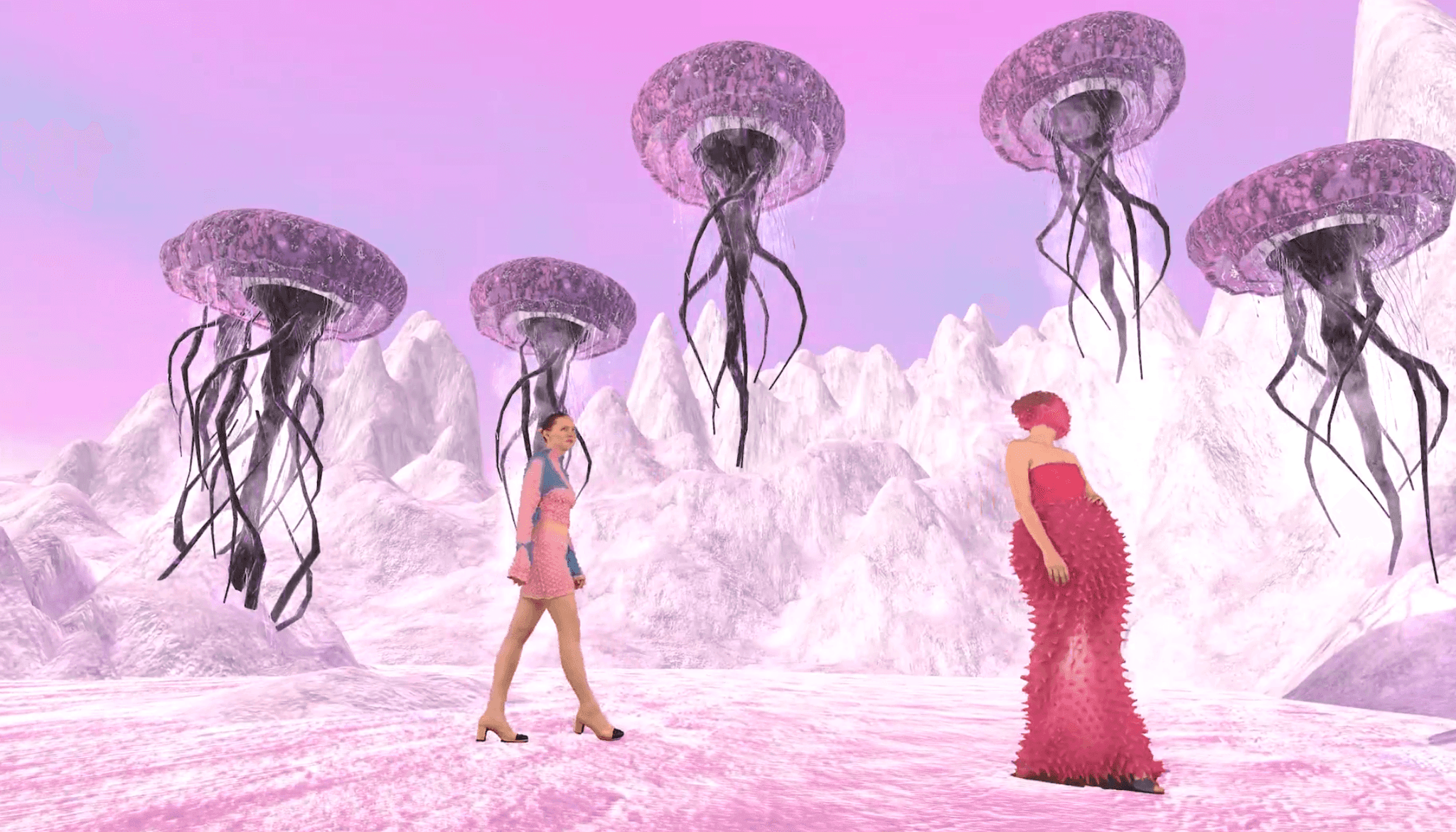

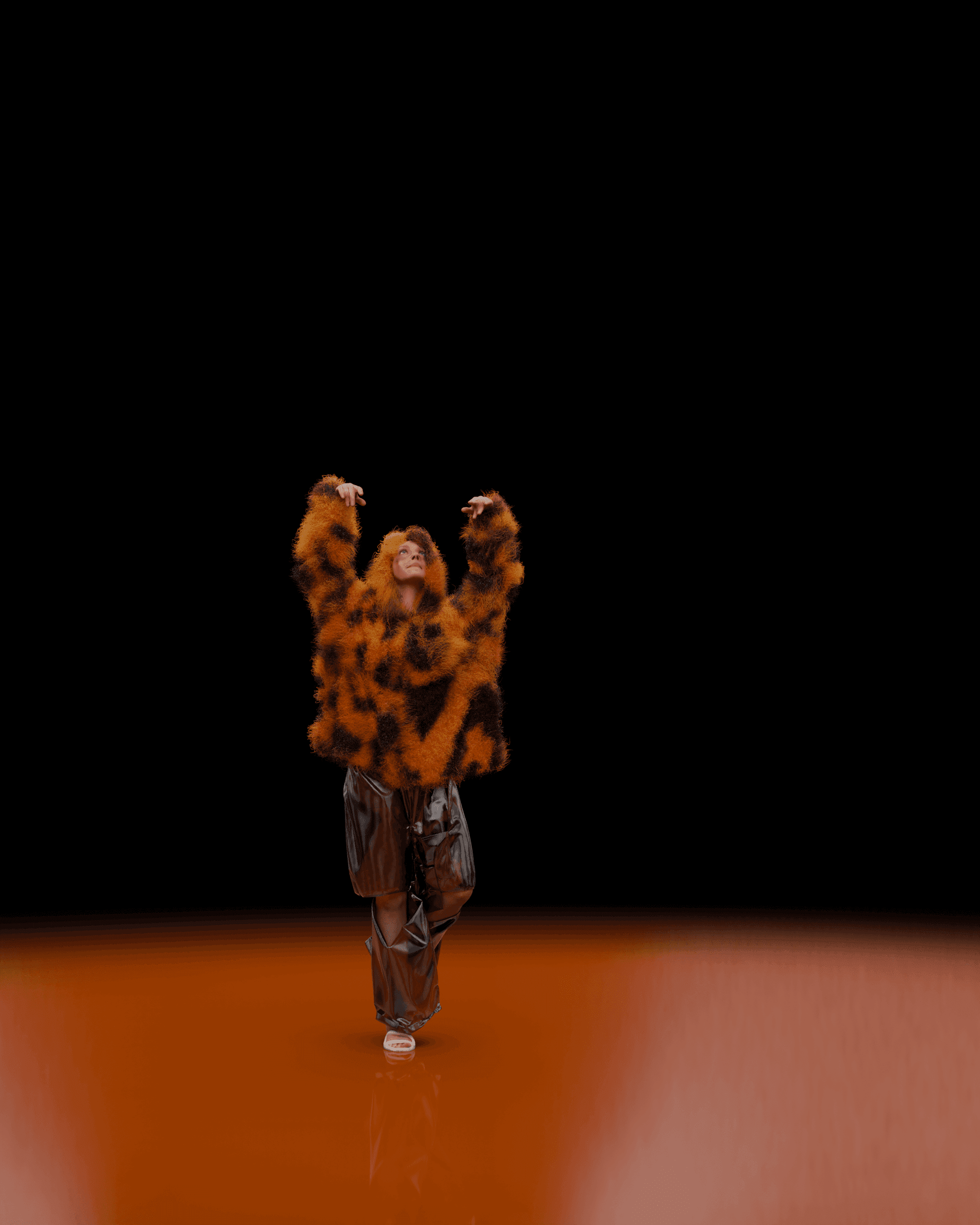

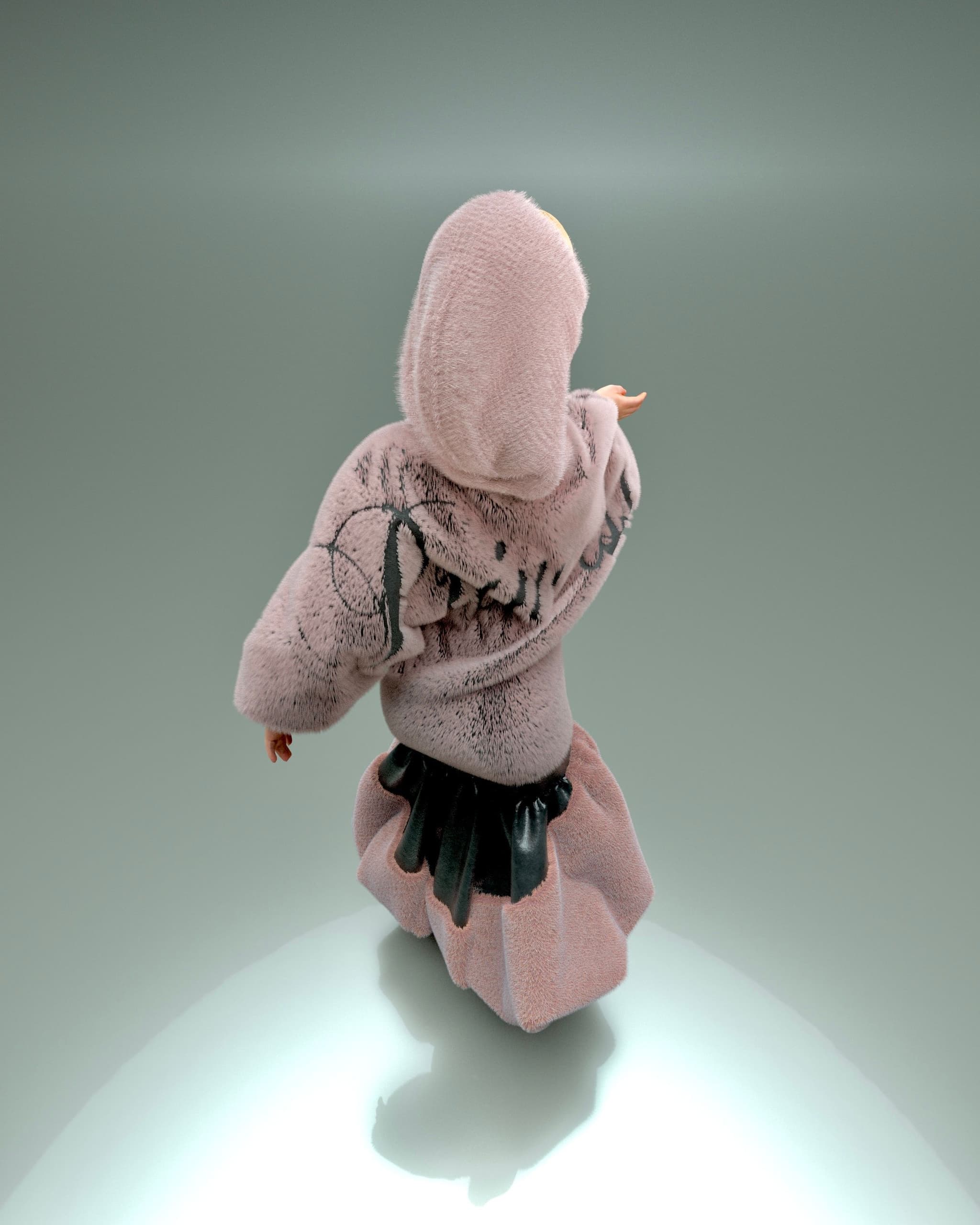

To do this, I’ve been looking closely at creative methodologies from the VFX industry and adapting them for fabric simulation, for instance by borrowing techniques used in creature animation and applying that level of nuance to how fabric moves, folds, and reacts. Together with two brilliant interns I had the pleasure of working with at the Fashion Innovation Agency, Tamaris Ellins and Imogen Fox, I’ve been experimenting with a wide range of materials, from fur and knitwear to embossed leather and delicate fabrics like lace.

The goal is to build an extensive knowledge base that allows any brand or designer collaborating with us to confidently digitize their garments, knowing that we can achieve both accuracy and expressive realism in the virtual space.

The fidelity of fabric scans clearly matters in digital fashion pipelines. What properties do you prioritise (texture, translucency, flexibility) when identifying a material’s readiness for digitisation?

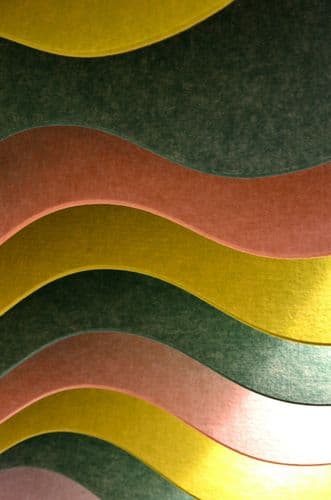

My work involves both scanning physical textures and recreating them as 3D materials using processes that combine tools such as HP Captis, Adobe Substance 3D, and M-XR’s texture scanning pipelines, as well as workflows that design materials digitally through approaches inspired by the VFX industry. These pathways allow textures to be digitised with high accuracy, capturing key properties such as translucency, reflectiveness, and how they behave under different lighting conditions.

Through Reskinning Reality, the goal has been to develop a more advanced and adaptive material digitisation process. I’ve discovered that different pipelines perform better depending on the material. For example, when working with fur, fleece, or knitwear, I follow a workflow closer to those used in the VFX industry to create creature simulations. This often begins with base materials recreated in 2D within CLO or Substance, which are then refined through 3D particle or hair systems in software such as Cinema 4D, Blender, or Houdini.

Continuous research is essential to this work. Each fabric has its own behaviours and challenges, so identifying the optimal pipeline for each one allows our agency to remain flexible and capable of responding to the diverse needs of brands using the Reskinning Reality system.

When working across XR and game engines, how do you account for material behaviour, like drape, tension or reflectivity, that would otherwise be intuitive in physical fashion?

I’ve always believed that the digital world isn’t here to replace physical processes but to enhance and coexist with them, creating a more sustainable and inclusive future for fashion and the wider creative industries. The physical sense of fashion - how a garment feels, moves, and interacts with the body - remains essential to my work.

Technologies such as volumetric capture allow me to stay close to that physical reality. I’m drawn to it for its ability to create digital human assets that feel intimate and lifelike, enabling garments to exist and move authentically in 3D space. It also empowers brands that have traditionally focused on physical design to see their creations digitally, with all the nuance and beauty of real fabric movement.

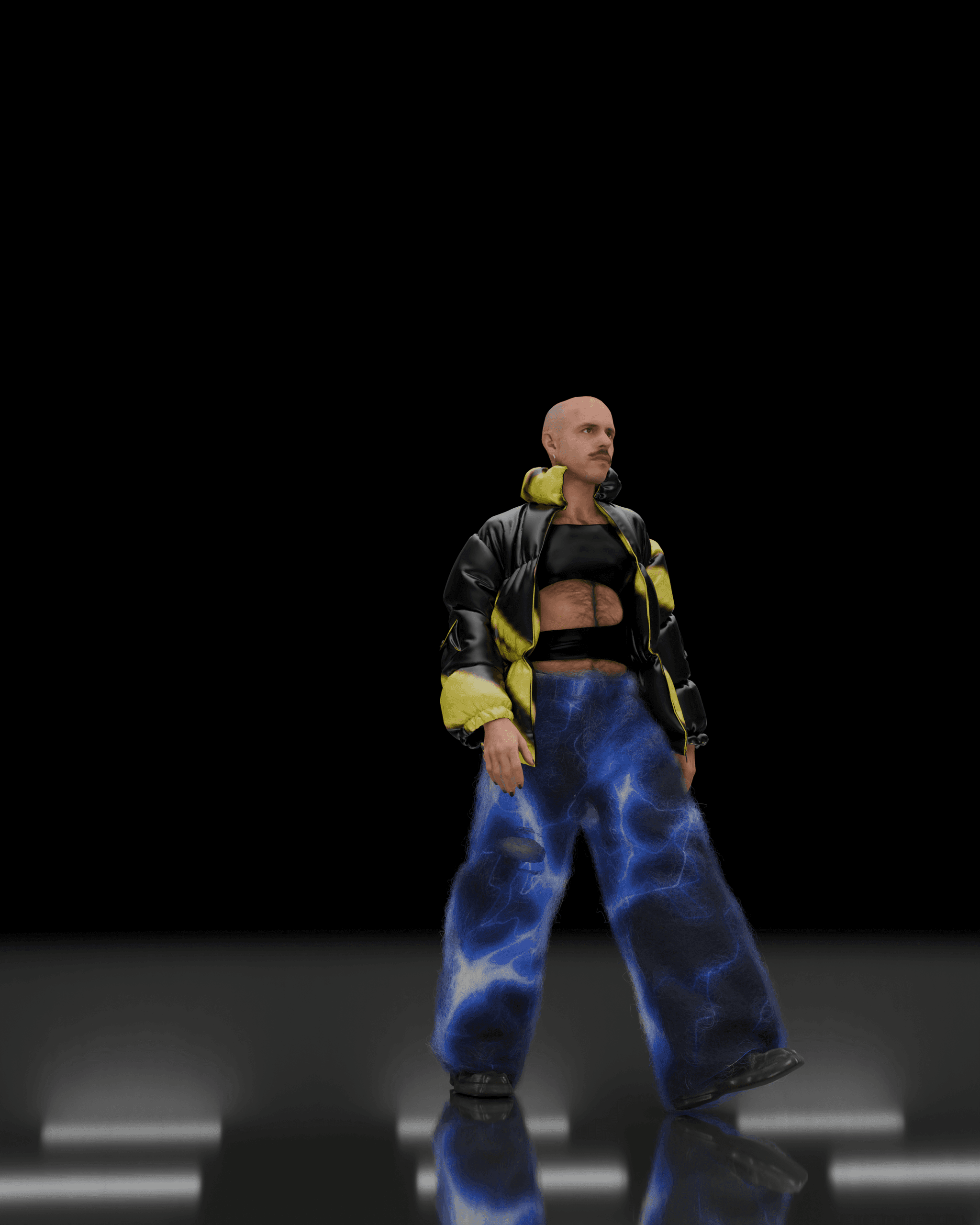

Within XR ecosystems, animation plays a crucial role. A garment is lifeless without a body, and each body reinterprets it differently. That’s why I’m deeply focused on integrating motion capture and volumetric techniques to bring digital fashion to life. I’m particularly interested in AR and spatial computing for the ways they allow physical and digital realities to coexist in a symbiotic and expressive way, something that resonates deeply with how I see technology and creativity intertwining.

AI is also becoming a significant part of this process. Machine learning models that can predict how fabrics crease, stretch, and behave under different conditions now enable real-time cloth simulation and more dynamic interaction between digital garments and physical movement. Having collaborated with major VFX studios like ILM and Digital Domain, I’m excited by how these advancements are pushing us closer to a point where digital fashion can truly capture the emotional and tactile qualities of the physical world.

In the “Reskinning Reality” project, you explored the idea of garments as interchangeable layers. How did material choice enable that modularity, and what constraints did you face?

Reskinning Reality is both a research project and a prototype for a service model that can be commercialised and offered to brands. The idea was to replicate existing physical fabrics with as much accuracy and creative depth as possible, while integrating 3D fashion design with VFX and real-time rendering pipelines. Material choice was essential to enabling modularity: we needed fabrics that could be digitally reconstructed, layered, and reinterpreted across different virtual bodies and environments without losing their physical characteristics.

The main constraints were largely technical. Volumetric capture files are very complex and heavy, and aligning them with 3D garment topology presented significant challenges. We also needed advanced computing power to simulate certain fabrics, especially those requiring VFX systems similar to those used for hair or fur dynamics. These constraints, however, became an opportunity to refine our workflow and develop a more efficient, flexible pipeline capable of handling a wide range of materials and garment types.

Can you speak to the role of sourcing in immersive environments? How do you find or commission physical materials that meet both aesthetic and technical expectations for digital use?

It really depends on the project and the type of collaboration. I’m interested not only in faithfully replicating materials to create seamless digital representations, but also in experimenting with how far we can push their transformation once they enter immersive environments.

While some projects require precise, physically accurate digitisation, others allow for more creative freedom, and that’s where things become most exciting for me. I’m fascinated by the idea of abstraction, of using material qualities in ways that are not possible in the physical world. By manipulating texture, reflectivity, or translucency, we can create otherworldly materials or reimagine familiar ones in completely unexpected contexts. That balance between realism and experimentation is what keeps the process both technically challenging and creatively rewarding.

As digital twins of garments gain traction, do you see a future where sourcing platforms also provide material ratings based on digital adaptability or scan performance?

Absolutely. I think we’re moving toward a future where materials will be evaluated not only by their physical qualities but also by how effectively they can exist and perform in digital space. As digital twins become integral to design, production, and retail, it makes sense for sourcing platforms to include metrics that assess a fabric’s “digital readiness” - how well it scans, simulates, and integrates into 3D or real-time environments.

This could involve standardized ratings for properties like surface reflectivity, translucency, or topology cleanliness after scanning, helping designers and developers understand how a material will behave in both physical and virtual contexts. I see this as part of a larger shift toward a hybrid material ecosystem, one that merges physical sourcing intelligence with digital adaptability.
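As a rough illustration of what such a rating could look like, the sketch below combines three normalised scores into a single "digital readiness" value and a letter band. It is a minimal sketch under stated assumptions: the field names, the weights, and the band thresholds are all hypothetical and are not taken from any existing sourcing platform or from the Reskinning Reality pipeline.

```python
from dataclasses import dataclass

# Hypothetical weights: the interview names the kinds of properties a rating
# might cover, but not how they would be combined.
WEIGHTS = {
    "scan_fidelity": 0.4,
    "topology_cleanliness": 0.3,
    "simulation_performance": 0.3,
}


@dataclass(frozen=True)
class MaterialScanReport:
    """Normalised scores in [0, 1] for one scanned fabric (assumed inputs)."""
    name: str
    scan_fidelity: float
    topology_cleanliness: float
    simulation_performance: float


def digital_readiness(report: MaterialScanReport) -> float:
    """Return the weighted average of the three scores."""
    total = 0.0
    for field, weight in WEIGHTS.items():
        value = getattr(report, field)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{field} must be in [0, 1], got {value}")
        total += weight * value
    return total


def readiness_band(score: float) -> str:
    """Map a score to a coarse letter band (thresholds are illustrative)."""
    if score >= 0.8:
        return "A"
    if score >= 0.6:
        return "B"
    return "C"


if __name__ == "__main__":
    lace = MaterialScanReport("lace", 0.7, 0.5, 0.6)
    score = digital_readiness(lace)
    print(lace.name, round(score, 2), readiness_band(score))
```

A weighted average is only one possible design. A platform could just as reasonably publish the individual scores and leave the weighting to each designer.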

At the same time, AI and computer vision will likely play a key role in automating these evaluations. By analysing data from multiple scans and simulations, we could develop predictive systems that guide designers toward materials best suited for digital workflows.

And while accuracy is important, we also need to keep an open mind about what a material can become in a virtual space. In the digital world, many physical properties can be expanded, re-purposed, or completely re-contextualised. The rules of physics no longer apply in the same way, which means materials can move beyond realism into the realm of imagination. That freedom - to stretch, dissolve, or transform - expands the creative potential of design, allowing us to rethink materiality itself as something fluid, evolving, and alive.

What tools or workflows have helped you most in building a bridge between physical textiles and their immersive digital counterparts? Are there gaps in current sourcing infrastructure that hinder this process?

Several technologies have been central to this process. I’ve worked extensively with fabric scanning systems from HP, Adobe Substance, and the London-based company M-XR, each offering different strengths in texture capture and material fidelity. Beyond these, I’ve also been experimenting with VFX workflows - particularly particle systems and hair simulation tools - to replicate the complex behaviours of fabrics in motion. It’s still a research journey, but I’m very optimistic about where these technologies are heading.

Digital design tools like CLO 3D have also played a key role in helping me create advanced 3D materials, while generative AI is opening up entirely new possibilities for material recreation from images or texture files. I believe we’ll see an increasing number of AI-driven tools that make these processes more intuitive and accessible for brands. At the Fashion Innovation Agency, we’re currently building an in-house machine learning team specifically to explore these solutions further.

The main gaps at the moment relate to computing power and cost. High-end material digitisation and simulation pipelines still require significant technical resources, which can make them challenging for smaller brands or independent designers. But as the technology evolves, I’m confident these tools will become much more accessible and scalable across the industry.

Many of your immersive works function as living, interactive environments. How do you evaluate materials for their responsiveness or potential in reactive design systems?

I’m very interested in how materials and digital environments can evolve through interaction - how they can sense, respond, and transform in relation to human presence. An example of this would be developing generative materials that react to movement, proximity, or gesture, allowing the environment to feel alive and participatory.

Working in real time is crucial here. Platforms like Unreal Engine give me the ability not just to create 3D worlds but to program modes of interaction within them. This means that materials can change their properties - colour, reflectivity, density - in direct response to what’s happening in the space. These dynamic systems transform digital environments into living organisms that breathe and shift alongside their audience.
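The idea of a material changing colour, reflectivity, and density in response to the space can be sketched in engine-agnostic terms. The example below is a minimal sketch, not Unreal Engine code: `MaterialState`, `proximity_to_blend`, `react`, and the near and far distances are hypothetical names and values chosen for illustration. It maps a visitor's distance to a blend factor and interpolates between a resting and an active material state.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MaterialState:
    """Illustrative material parameters (hypothetical, engine-agnostic)."""
    colour: Tuple[float, float, float]  # RGB, each channel in [0, 1]
    reflectivity: float
    density: float


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def proximity_to_blend(distance_m: float, near_m: float = 0.5, far_m: float = 4.0) -> float:
    """Return 0.0 at or beyond far_m, 1.0 at or within near_m, linear in between."""
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    t = (far_m - distance_m) / (far_m - near_m)
    return max(0.0, min(1.0, t))


def react(rest: MaterialState, active: MaterialState, distance_m: float) -> MaterialState:
    """Blend from the resting state toward the active state as a visitor approaches."""
    t = proximity_to_blend(distance_m)
    colour = (
        lerp(rest.colour[0], active.colour[0], t),
        lerp(rest.colour[1], active.colour[1], t),
        lerp(rest.colour[2], active.colour[2], t),
    )
    return MaterialState(
        colour=colour,
        reflectivity=lerp(rest.reflectivity, active.reflectivity, t),
        density=lerp(rest.density, active.density, t),
    )


if __name__ == "__main__":
    rest = MaterialState((0.0, 0.0, 0.0), 0.0, 0.0)
    active = MaterialState((1.0, 1.0, 1.0), 1.0, 1.0)
    for d in (5.0, 2.25, 0.3):
        print(d, react(rest, active, d))
```

In a real-time engine, the same mapping would typically be evaluated every frame and written into whatever material parameters the engine exposes. The distance input could equally be replaced by gesture or movement data.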

What I find exciting is that this approach moves us beyond static digital design. It opens a space where the visitor becomes part of the creative process, influencing how the world behaves and evolves. For me, that sense of responsiveness, of co-authorship between human and digital matter, is where the future of immersive design truly lies.

In collaborations like the CIRCA Prize installation, you integrate non-linear, queered spaces. How do you ensure the materials, physical or digital, don’t reinforce traditional norms but open new modes of interaction?

In both my artistic and research practice, I use queerness not only as a subject or form of representation but as a design mechanism - a way of thinking, making, and transforming materials. Rather than treating queerness as something to be depicted, I approach it as a method for reimagining how materials behave, evolve, and interact. This means questioning normative uses of materials, both physical and digital, and reappropriating them through a queer lens.

Queerness allows me to challenge the idea of permanence, to embrace temporality, fluidity, and change. In that sense, my materials are often designed to shapeshift, to remain open and unresolved. I’ve been experimenting with AI-driven workflows that enable real-time material transformation, where the outcome isn’t predetermined. This introduces an element of the unknown, of constant becoming, which feels very aligned with queer thinking.

Recently, I’ve been drawn to creating digital environments that evolve over time and, in some cases, are shaped directly by the audience. Interaction becomes a shared act of authorship, where materials and environments respond to presence, gesture, and collective engagement. For me, this is where queerness truly manifests - in systems that resist closure and instead invite participation, transformation, and multiplicity.

As the boundaries between fashion media, digital space, and fabrication continue to blur, what kind of material sourcing intelligence would empower your practice further, particularly when navigating both aesthetic goals and performance constraints?

What would truly empower my practice is a more integrated ecosystem, one where traditional craftsmanship, digital fabrication, and emerging technologies communicate seamlessly. I’m particularly interested in fostering cross-collaboration between different pipelines: between textile manufacturers and 3D material designers, between fashion houses and VFX or gaming studios. These intersections often reveal the most innovative solutions, bridging aesthetic ambition with technical performance.

AI plays a major role in this evolution. Machine learning systems can already analyse and classify material properties with extraordinary precision, helping us understand how fabrics might behave before they even exist physically. I imagine a future where AI models can predict how a material will drape or reflect light in specific conditions, or where they can generate entirely new digital textiles optimised for both realism and performance.

At the Fashion Innovation Agency, we’re already moving in this direction, building internal machine learning capabilities to explore intelligent material sourcing and generation. The goal is to create a system that not only streamlines production but also enhances creativity across the wider fashion ecosystem. For me, it’s not about replacing human intuition, but amplifying it, using AI as a collaborator that helps connect the aesthetic, the technical, and the imaginative.